He Said, She Said… Which Tool is Telling the Truth?

If you are dealing with digital data, chances are you have run into this scenario more than once: one system shows X, another says Y.

One shows sales are flat, the other one shows a dip. Which system can you trust? Do you choose to believe in the better numbers to paint a prettier picture? Do you throw your hands up in despair, not knowing what to report, really?

Or do you break out your snorkeling mask and deep-dive into the murky data ocean, searching for individual visitors, orders or hits, to sort out what’s really going on?

As the deep-diving type, I wanted to share some experiences with you in a three-part series:

Part I: Taking It To The (Data) Source - Transitioning from one reporting system to another or adding another reporting tool.

Part II: Devi's In The Details - Dealing with revenue mismatches between clickstream and backend systems.

Part III: The Owls Are Not What They Seem - Comparing first- and third-party data.

Part I: Taking It To The (Data) Source

This is a story about data discrepancies that emerge when you transition from one reporting system to another, or when you add a new reporting tool.

Background:

In my early days as a junior web analyst at a digital analytics agency, one of our customers, a large real estate company, was advertising thousands of listings on nytimes.com. The client had made a decision to transition from traditional server log analysis to a brand new, client-side tagging implementation on their website.

The new implementation had been sold to them for all of its advantages: KPI identification through custom tags, improved spider filtering, consistent cookie identifiers, smaller log file storage requirements, etc. Understandably, the client was excited.

The Plan:

We were going to vet the new reports by simultaneously analyzing the data from the server logs and the client-side logs, to ensure the numbers produced by the two methods were “in-line”. Requirements were gathered, specs were written, tags implemented, the big day came… and… GULP!!! The numbers were nowhere near “in-line”. In fact, they were not in even in the same neighborhood.

To make matters worse, the reports from the server side logs were showing figures multiple times higher than the ones produced by the brand new client-side log analysis. No way was the customer going to sign off on this new method of data collection. We had prepared views of the data to try to estimate differences stemming from sessionization, robot filtering, internal IP's, etc… but none of these factors could explain such a huge delta.

The customer was not happy and they were simply not buying the new “wrong data”. And why should they?

What Solved It:

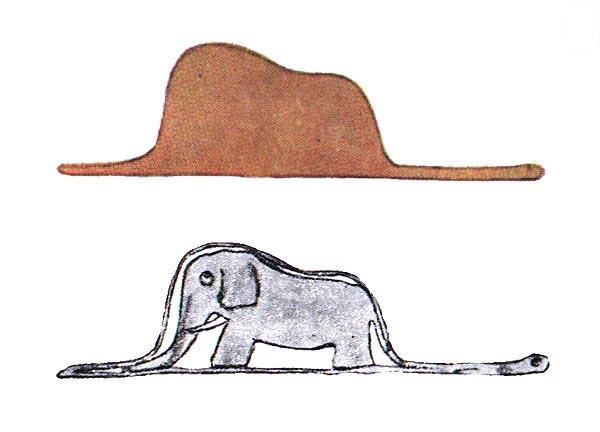

The big “a-ha” moment came when we focused on a segment of traffic - visitors referred by nytimes.com. This was perceived as a valuable segment of people coming to the site through links on nytimes.com. In reality, each nytimes.com listing had images of the corresponding property that were being hosted on our client's website. When a visitor saw a listing on nytimes.com, they were making a direct request for its image, thereby populating the server-side logs with activity which drastically inflated visits and visitor counts.

These visitors rarely clicked through to the client's actual website. The majority of visits reported by the server-side logs turned out to be visitors simply browsing on nytimes.com.

Part II. Devil's In The Details

This second story is about reconciling revenue data reported by a back-end transactional database and a clickstream solution.

Such data is usually closely scrutinized within an organization, so investigations concerning revenue are quite common. The more common cases usually have to do with modified shopping carts, testing of new flows or promotions that have not been tagged for analytics purposes. While the story itself is somewhat unusual, it illustrates a debugging method that is used quite often.

Background:

A nonprofit organization had been running a web analytics solution for a number of months. Their main KPI was revenue from donations. The revenue numbers had been slightly lower but trending practically identical with the back-end revenue reporting system until the last quarter of the year rolled around. And then the numbers started diverging—the back-end system showed a spike in revenue, while the analytics solution was reporting significantly lower numbers.

The Plan:

Our first suspect were the revenue tags. We made a small donation and inspected the tracking requests that were part of the flow. Nothing really jumped at us. Next step was to focus on a single day where the delta between the two systems was particularly wide. We pulled all the transaction IDs from both systems and started comparing them one at a time.

What Solved It:

The revelation came when we noticed records such as the ones highlighted in red:

| Backend System TransactionID |

Backend System Revenue |

Clickstream System TransactionID |

Clickstream System Revenue |

| ABC123 | $250.00 | ABC123 | $250.00 |

| XYZ456 | $100.00 | XYZ456 | $100.00 |

| HMN789 | $2,000.00 | HMN789 | $2.00 |

| KLZ123 | $400.00 | KLZ123 | $400.00 |

| DBA910 | $5,000.00 | DBA910 | $5.00 |

| ... | ... | ... | ... |

The clickstream system was reporting only 1% of the revenue for orders over $999.99. What happened was the comma inside numbers such as 2,000.00 was being passed to the tags of the clickstream solution and the solution was interpreting it as a decimal point. Such large transactions had not been tested during tag deployment, which allowed the defect to live in the production system for months.

The sudden divergence in revenue reported by the two systems was set off by seasonality. In the fourth quarter, donors were making larger donations to take advantage of tax benefits for the calendar year. Large donations triggered the bug and caused the clickstream solution to record only a fraction of actual revenue while the number of donations remained consistent with what the back end was showing.

Part III. "The Owls Are Not What They Seem"

In my experience, having to compare internal analytics data with external data provided by third parties about the company is not a daily thing. But when it does happen, there's usually a compelling need.

Sometimes, there are internal pressures to align "private" metrics with widely accepted standards. Or, if the third-party data is publicly accessible, it may influence public perception of the organization, affecting not only image and branding but also stock price.

Background:

A company had invested heavily in various strategies relating to traffic acquisition--SEO, partnerships, paid campaigns, you name it. The traffic increase was gradually being reflected in the company's internal analytics reports with visitor counts on the rise. Despite that, a popular audience measurement company distributing data about company websites was showing that the visitor counts for this particular website were flat, and even gradually declining.

The Plan:

We had a good understanding of the in-house analytics platform at the time. There were no major concerns with respect to the accuracy of the internally collected data. Traffic increase was real and verifiable. The plan was to learn all we could about the methodology the third party used when generating their reports, and more specifically their visitor metric.

What Solved It:

I wish I could call this a solution... it wasn't, it was more of a takeaway. The high-level steps alone of what it took to produce the visitor metric in this third-party vendor platform went something like this:

- Raw data was collected through a web beacon technology using cookies for unique visitor identification

- Through IP analysis - the data was filtered to US-only visitors (a 10% decrease)

- Further adjustments were made for things such as robotic activity or other disqualifying traffic patterns (a 7% decrease)

- The cookie-level data was then translated into visitor-level data through adjustments based on an ultra-secret "surveying methodology" (resulting in a 21% reduction)

- The visitor data was then even further adjusted to account for visitors who may not have interacted with the beacon technology, a number also derived through surveying methodology (a 7% add-back)

- Additional unknown data adjustments were applied for specific URLs lacking a "critical mass" of observations, resulting in some long-tail URLs not being counted at all

I am not trying to criticize this particular vendor, I'm just pointing out the conclusion / opinion we reached in our inquiry. The takeaway: There is no way to compare data points with the same name from two systems if one of those systems is a black box, applying secret sauce to industry standard metric definitions.

Some Further Lessons:

It is key to be able to understand how data is collected and processed before it ends up becoming a number on a pretty dashboard.Sometimes, you won't have visibility into all that goes into the number. There are proprietary, technical, and human obstacles in the way of complete transparency. But you should still be flashing your light as far down as possible.

Here is a checklist of questions to ask about data that will help you to better compare metrics produced by different platforms:

- What are the characteristics of this particular data source?

- Is the source of the data server-side, client-side, survey-sourced, database-driven, other?

- Does it require special tagging, and if so, is that tagging prone to omissions or human error?

- Is it subject to browser peculiarities, timing issues, conflicts with other data collectors?

- Are all requests being recorded? Is robotic or internal activity identified intelligently? Any exclusions based on response codes, IPs, file extensions, User Agents etc?

- Does the data get moved around before it gets analyzed? If so, what are the schedules for log file pulls, rotation, archiving? Can failures in these processes undermine accuracy?

- If you are dealing with survey or sampled data - what is the sample size? Survey method? Respondent selection used? What adjustments may have already taken place vs. the adjustments you yourself need to make?

- Are there any timezone offsets between recorded time and reported time? If so, do you have a way to ensure they don't affect reported time periods?

- Can the raw data be manually inspected? Do you have access to the individual records and can you review them? Is the data using some sort of tool-specific compression or can you review the individual fields that enter analysis?

- Can you view the reports as data is being generated in real time? If so, are there ways to identify your own activity, either within the raw data or directly in the reports?

- Do you have a complete understanding of all the transformations that the data goes through after it has been loaded for analysis and before it appears in a report or dashboard?

- Can you compare data between two systems at the most granular level (order/transactionID, individual hits, URLs, login IDs, etc)? If not, are there steps you can take to make this possible?